Open Source Offers Inspiration

Many big companies contribute significant libraries and dedicate staff to work full-time on open-source projects. For individual developers, life is not so rosy: they increasingly wear themselves out trying to make the world a better place. Lack of funding often leaves projects abandoned or barely maintained. In addition, source-code management platforms usually equate the maintainer with the owner, and decision processes are neither properly defined nor supported. All too often, the creator of a project goes offline along with the key infrastructure, leaving core developers unsure of what to do next.

Massi is a developer at heart with a strong interest in open source. Having successfully founded several companies, Massi used his entrepreneurial skills to build Clyste, a platform that enables open-source developers to monetize their contributions. Pursuing a grander vision, he soon decided to try improving the organizational structure of open-source projects.

Massi wanted to empower developers and users to steer the direction of projects, including ways to monetize their contributions. Along these lines, he kept asking himself which factors should be considered when evaluating a pull request. Contributions can also be non-code, so a holistic system was needed to quantify human interactions. This foray into contribution metrics gave him deep insight into the different moving parts of a project. Most importantly, it made code processes dear to his heart.

What if Reviewers Were Working Twice as Much?

On the other side of the contribution counter lies code review. A contribution cannot be effective unless it passes code review. This often-neglected step is crucial to code quality and even to niche security concerns. At Google, for example, code review was introduced to keep code readable and maintainable, with the broader aim of making products reliable enough for production use. Google’s three key lessons for code reviews are to

- check style consistency and design,

- ensure adequate tests, and

- ensure security by preventing arbitrary code from being included.

Continuous software changes that do not always meet software quality requirements create technical debt, i.e., quick workarounds in the source code that worsen its maintainability. Technical debt in turn makes every further change harder, leading to a downward spiral.

Chasing bugs and identifying security flaws manually is tedious. There are many pitfalls: the reviewer may lack expertise, suffer from fatigue, or not be up to date with the latest best practices. That’s why reviewers try to integrate tools that identify potential issues before the review starts. When pull request authors fix flagged issues themselves, reviewers can focus on logic, design, and corner cases. Even at nuclear power plants, the combination of humans and machines helps prevent disasters. Yet automated reviews, even of core aspects, are not the rule in the open-source world.

Debugging Is the Missing Stair

Debugging is an essential part of software engineering that every developer goes through. Yet little thought is given to making the process as good as it can be. A “missing stair” is a problem that everybody knows exists but chooses to work around rather than fix. Rarely does code work the first time and forever after. Many patches stem from bugs or edge cases that people encountered and then traced to their cause. Maintainers spend time reviewing usage reports, reproducing the bug, and checking whether the fix is effective. Because debugging is interlaced with writing code, it gets less attention as a process in its own right. Reportedly, developers spend as much as 50% of their time debugging.

Robust Tech and Bold Steps Bring Amazing Results

Massi views code review and debugging as core processes in modern software development. At Clyste, the team had researched AI and blockchain as possible solutions to the governance and funding problems that projects faced. Around this time, NEC X asked Massi to lead one of its labs, and he felt it was time to devote more attention to code review. He had a rough outline of a tool that would identify simple issues and suggest fixes, streamlining the process to weed out as many bugs as possible. If code review could be streamlined this way, existing codebases could benefit as well, after the fact.

Tools to catch and fix bugs already exist, but usually several separate tools have to be integrated: some check logic and flow, others perform security audits, and still others check consistency. Emboldened by the heuristics developed at Clyste, Massi decided that AI could be a great addition to a debugging tool. Most static code analysis tools on the market are rule-based: they identify issues against a set of predefined rules. This gives quick results and makes it possible to build a proof of concept fast. At scale, however, rule-based systems fall short. Keeping the rules up to date, covering a wide range of issues, and isolating fixes becomes a superhuman effort.
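
To make the idea of a rule-based checker concrete, here is a minimal sketch in Python built on the standard-library `ast` module. The two rules (flagging bare `except:` clauses and mutable default arguments) and the helper names `Finding`, `RULES`, and `analyze` are illustrative assumptions, not rules or APIs from any particular product. Note that every new class of issue needs another hand-written rule function, which is exactly why this approach is hard to scale.

```python
import ast
from dataclasses import dataclass


@dataclass
class Finding:
    line: int
    rule: str
    message: str


def check_bare_except(tree: ast.AST) -> list[Finding]:
    # Illustrative rule: a bare `except:` also swallows KeyboardInterrupt and SystemExit.
    return [
        Finding(node.lineno, "bare-except", "catch a specific exception type")
        for node in ast.walk(tree)
        if isinstance(node, ast.ExceptHandler) and node.type is None
    ]


def check_mutable_default(tree: ast.AST) -> list[Finding]:
    # Illustrative rule: list/dict/set literal defaults are evaluated once and shared across calls.
    findings = []
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            # kw_defaults may contain None for keyword-only args without a default; isinstance skips those.
            for default in node.args.defaults + node.args.kw_defaults:
                if isinstance(default, (ast.List, ast.Dict, ast.Set)):
                    findings.append(
                        Finding(default.lineno, "mutable-default", "default to None and build the object inside")
                    )
    return findings


# The hypothetical rule set: each entry is a function from a syntax tree to findings.
RULES = [check_bare_except, check_mutable_default]


def analyze(source: str) -> list[Finding]:
    """Parse the source once, run every rule, and report findings in line order."""
    tree = ast.parse(source)
    findings = [finding for rule in RULES for finding in rule(tree)]
    return sorted(findings, key=lambda f: f.line)


SAMPLE = """\
def append_item(item, bucket=[]):
    bucket.append(item)
    return bucket

try:
    append_item(1)
except:
    pass
"""

if __name__ == "__main__":
    for f in analyze(SAMPLE):
        print(f"line {f.line}: [{f.rule}] {f.message}")
```

Running it reports the mutable default on line 1 and the bare `except:` on line 7 of the sample. The checker is fast and predictable, but it only knows what its authors thought to encode.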

There is also the problem of precision. Various studies have shown the limits of existing tools and techniques. Many tools are simply not accurate; others identify issues but miss code smells. As a consequence, these tools are used with a “better than nothing” attitude or are rejected outright. Massi’s vision was to truly solve the debugging problem. Machine learning approaches, on the other hand, had laid good groundwork for further experimentation: studies such as Arcelli Fontana and Zanoni (2017) and Shcherban et al. (2020), for example, painted a promising picture.

He decided to bet on AI rather than conventional solutions, despite the low odds of success, the voracious demands of machine learning, and the meta nature of the task. And thus, Metabob was born.

The Challenges of Solving Debugging With AI

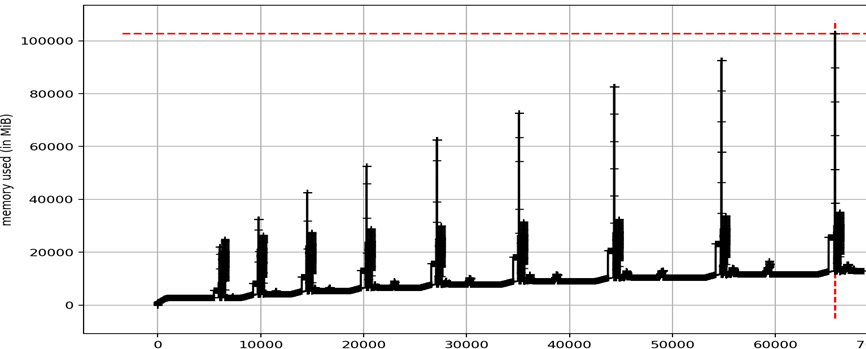

Designing a debugging tool comes with its own set of challenges. What if a tool flagged issues well but raised one for every line? For a 1,000-line codebase, we would have 1,000 alerts to read. That’s why alert density must stay at an acceptable level: alerts must not turn into noise. Should only the most crucial alerts be shown? It is a tricky balance to get right. Working through issues should also require minimal effort. If there are many alerts and each one has to be fixed by hand, resolving them takes even longer. In other words, a tool should ideally be able to suggest automatic code fixes.
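
One simple way to keep alert density in check is to give each codebase an alert budget. The sketch below is a hypothetical illustration, not Metabob’s method: it assumes every alert carries a severity and a confidence score (the `Alert` fields, the `budget_alerts` helper, and the default thresholds are all made up for this example), drops low-confidence alerts, ranks the rest, and shows at most a fixed number per 1,000 lines.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    line: int
    severity: int      # hypothetical scale: 1 (style nit) to 3 (likely bug)
    confidence: float  # hypothetical tool confidence in [0, 1]


def budget_alerts(alerts, total_lines, max_per_kloc=10.0, min_confidence=0.5):
    """Keep at most `max_per_kloc` alerts per 1,000 lines, most important first."""
    # Drop low-confidence alerts outright: they are the most likely to be noise.
    candidates = [a for a in alerts if a.confidence >= min_confidence]
    # Rank by severity, then confidence, so the budget is spent on the crucial alerts.
    candidates.sort(key=lambda a: (a.severity, a.confidence), reverse=True)
    # Always allow at least one alert, even for very small files.
    budget = max(1, int(total_lines * max_per_kloc / 1000))
    # Return the surviving alerts in source order for easier reading.
    return sorted(candidates[:budget], key=lambda a: a.line)


if __name__ == "__main__":
    # One alert per line in a 1,000-line codebase: the noisy scenario from the text.
    noisy = [Alert(line=i, severity=1 + i % 3, confidence=(i % 10) / 10) for i in range(1, 1001)]
    shown = budget_alerts(noisy, total_lines=1000)
    print(f"{len(noisy)} raw alerts -> {len(shown)} shown")
```

With the defaults, the 1,000 synthetic alerts shrink to 10, all of them in the highest severity and confidence band. A hard budget is blunt (it can hide real issues in a buggy file), so in practice the thresholds would need tuning against how users actually respond to alerts.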

Machine learning is not a magic switch; it does not solve problems in a snap. There are a huge number of machine learning models, so the first step is to find the best one for the task, which takes insight, experimentation, and exploration. But that’s not all. Data is needed, and a huge amount of it. It’s not only about finding the data but also about labeling it. At scale, a process should be devised to label the data automatically wherever possible. The explanations the tool gives should be succinct and relevant; the tool must not simply autocomplete what it was trained on.
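
Automatic labeling is often done with weak, heuristic labels. As a minimal sketch, assuming the raw data is a stream of commit messages, the snippet below labels a commit as a bug fix when its message contains fix-related keywords, a heuristic commonly used when mining software repositories. The keyword lists and the `weak_label` and `label_dataset` helpers are hypothetical; this is not a description of how Metabob labels its data.

```python
import re
from typing import Optional

# Hypothetical keyword lists; a real pipeline would tune them on a manually labeled sample.
BUGFIX = re.compile(r"\b(fix(e[sd])?|bugs?|defects?|crash(es)?|regressions?)\b", re.IGNORECASE)
NON_FUNCTIONAL = re.compile(r"\b(typos?|docs?|readme|formatting|whitespace)\b", re.IGNORECASE)


def weak_label(message: str) -> Optional[str]:
    """Return 'bugfix', 'non-bug', or None (abstain) for a single commit message."""
    if NON_FUNCTIONAL.search(message):
        # Documentation or formatting commits often say "fix typo", so check them first.
        return "non-bug"
    if BUGFIX.search(message):
        return "bugfix"
    return None  # Too ambiguous: leave it for manual labeling.


def label_dataset(messages):
    """Split messages into auto-labeled (message, label) pairs and unlabeled leftovers."""
    labeled, unlabeled = [], []
    for msg in messages:
        label = weak_label(msg)
        if label is None:
            unlabeled.append(msg)
        else:
            labeled.append((msg, label))
    return labeled, unlabeled


if __name__ == "__main__":
    commits = [
        "Fix off-by-one error in pagination",
        "Fix typo in README",
        "Add caching layer",
    ]
    labeled, unlabeled = label_dataset(commits)
    print(labeled)
    print(unlabeled)
```

Abstaining on ambiguous messages trades coverage for cleaner labels: fewer examples reach the training set automatically, but fewer of them are wrong.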

It is far from a shot in the dark; it is a work of continuous refinement. Along the way, this led the engineers to consult researchers, because the tool required deeper expertise than random trials could yield. Tuning an AI setup involves so many factors that knowing what to do is essential.

A Great Team Goes a Long Way

That was the turning point where Massi realized that such a problem could not be solved alone. He invited his friend Avi to work on the tool, along with a future Facebook engineer; both were researchers at the time. Avi went on to become the CTO. But more specialized help was needed, so Ben, an NLP engineer with 30+ years of experience, was brought in. Soon, the product came to life. Over time, the marketing team was built up and UI/UX was given attention.

Massi believes that an ideal team can learn a great deal from the open-source world. Remote work is a great way to tap into a global pool of diverse skills, and people involved in open-source contributions can be incredible hires. Working hard and passionately on a topic to make an impact by providing value to the software community captures the spirit of the whole enterprise.

Conclusion

Turning Metabob into a SaaS tool that is free of charge for individual developers allows the resulting product to be distributed and integrated into many codebases. The goal is to make Metabob a developer-first rather than a company-first tool; in the end, caring for people benefits the whole structure. The intersection of machine learning and language engineering is a daring feat. Talking with industry experts and competitors, Massi realized that he is on the right track. When asked what kept him going through the walls of the unknown, Massi replied: belief.